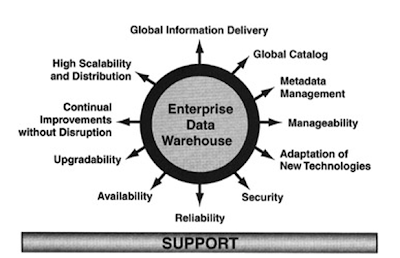

The figure above shows the characteristics of a high-performance data warehouse. It supports a large number of users, most of whom issue simple queries without any performance issues, and it needs little time for operations and maintenance, which keeps it highly available. In such an environment, everyone is satisfied with the data warehouse's performance. In reality, this seldom happens.

The number of users is not a good indicator of data warehouse performance. One hundred users may be issuing simple queries without any problem until a single analyst starts a complex analytical job that joins a large number of tables to perform sales trend analysis. Such a job can tie up the data warehouse server for hours. Data warehouse architects must therefore design resource-governing features that control these runaway, resource-consuming processes during peak hours.
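One way to picture such a governing feature is simple admission control: during peak hours, a query whose estimated cost exceeds a limit is deferred to an off-peak queue instead of running immediately. The sketch below is a minimal illustration under stated assumptions, not a description of any particular database's governor. The class names, the `estimated_cost` field (standing in for an optimizer's cost estimate), the 08:00 to 18:00 peak window, and the cost limit are all hypothetical.

```python
from dataclasses import dataclass, field
from datetime import time
from typing import List


@dataclass
class Query:
    query_id: str
    user: str
    estimated_cost: float  # hypothetical optimizer cost estimate (arbitrary units)


@dataclass
class QueryGovernor:
    """Hypothetical admission-control governor; thresholds are illustrative only."""
    peak_start: time = time(8, 0)
    peak_end: time = time(18, 0)
    peak_cost_limit: float = 1_000.0
    deferred: List[Query] = field(default_factory=list)

    def is_peak(self, now: time) -> bool:
        # Peak window is [peak_start, peak_end).
        return self.peak_start <= now < self.peak_end

    def admit(self, query: Query, now: time) -> str:
        """Return 'run' to execute now, or 'deferred' if queued for off-peak."""
        if self.is_peak(now) and query.estimated_cost > self.peak_cost_limit:
            self.deferred.append(query)
            return "deferred"
        return "run"

    def release_deferred(self, now: time) -> List[Query]:
        """Outside peak hours, hand back every deferred query for execution."""
        if self.is_peak(now):
            return []
        released, self.deferred = self.deferred, []
        return released


# Usage: a cheap lookup runs at once; the heavy trend-analysis job waits.
gov = QueryGovernor()
print(gov.admit(Query("q1", "analyst_a", estimated_cost=50), time(10, 30)))
print(gov.admit(Query("q2", "analyst_b", estimated_cost=250_000), time(10, 30)))
print([q.query_id for q in gov.release_deferred(time(22, 0))])
```

Deferring rather than rejecting keeps the analyst's job intact while protecting the interactive users; a real governor might instead cap CPU, memory, or runtime per query, which this sketch does not model.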

Without complete order operation information in the data warehouse, the planning, finance, sales, and marketing organizations will not have a full view of corporate operations, such as current product inventories and what to stock to meet consumer demand.

The performance issue here is that extracting complete data sets from OLTP systems and loading them into the data warehouse in a single step can consume significant OLTP and data warehouse resources: locks held on source and target data objects, network bandwidth, and CPU and memory.

The solution is to keep data extraction out of the daily OLTP maintenance operation and to break large extraction processes into multiple smaller tasks. Each task is scheduled to run several times during regular OLTP business hours, extracting new data and moving it into an operational data store (ODS). The data warehouse is then refreshed once or twice a day by combining all incremental data sets from the ODS, without touching the OLTP systems. (1.4)
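The incremental approach can be sketched in a few lines of in-memory Python. This is an illustration under assumptions, not the author's implementation: it assumes every OLTP row carries a monotonically increasing change timestamp (`updated_at`), and the `OrderRow` and `IncrementalLoader` names are hypothetical. Each intraday run copies only rows changed since the previous run's high-water mark into the ODS; the daily refresh then merges the ODS into the warehouse without reading the OLTP rows again.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class OrderRow:
    order_id: int
    updated_at: int  # assumed monotonically increasing change timestamp
    amount: float


class IncrementalLoader:
    """Hypothetical loader: small intraday extracts into an ODS, daily merge."""

    def __init__(self) -> None:
        self.high_water_mark = 0                  # last updated_at extracted
        self.ods: List[OrderRow] = []             # operational data store (staging)
        self.warehouse: Dict[int, OrderRow] = {}  # keyed by order_id

    def extract_increment(self, oltp_rows: List[OrderRow]) -> int:
        """One scheduled task: copy only rows changed since the last run."""
        new_rows = sorted(
            (r for r in oltp_rows if r.updated_at > self.high_water_mark),
            key=lambda r: r.updated_at,
        )
        self.ods.extend(new_rows)
        if new_rows:
            self.high_water_mark = new_rows[-1].updated_at
        return len(new_rows)

    def refresh_warehouse(self) -> int:
        """Daily refresh: merge all ODS increments without touching OLTP."""
        merged = 0
        for row in sorted(self.ods, key=lambda r: r.updated_at):
            self.warehouse[row.order_id] = row  # upsert: latest version wins
            merged += 1
        self.ods.clear()
        return merged


# Usage: two intraday extracts, then one warehouse refresh.
oltp = [OrderRow(1, 100, 20.0), OrderRow(2, 105, 35.5)]
loader = IncrementalLoader()
print(loader.extract_increment(oltp))   # morning run
oltp += [OrderRow(3, 130, 12.0), OrderRow(1, 140, 25.0)]  # order 1 updated
print(loader.extract_increment(oltp))   # afternoon run: only the new changes
print(loader.refresh_warehouse())
print(loader.warehouse[1].amount)
```

In a real system the high-water-mark filter would be pushed into the source query so the OLTP database returns only changed rows; filtering a Python list here just keeps the sketch self-contained.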
