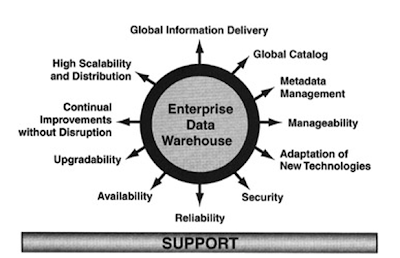

Global Information Delivery

The Internet and intranets are the primary vehicles for information delivery. The Web services that deliver information to end users must be robust in terms of scalability, security, reliability, and cost. It is critical that these Web services integrate seamlessly with the data objects in all data warehouse layers and share a similar development and management environment with them.

Global Catalog

A global catalog goes hand in hand with the information delivery services. When catalogs are based on individual data warehouses, it is hard to find what you need, and end users spend a lot of time searching. Support for one global catalog is therefore a key component of a global information delivery system.
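The idea of one catalog across several warehouses can be sketched in a few lines of Python. This is only an illustration: the warehouse names, object names, and the functions build_global_catalog and search are hypothetical and do not come from any SAP product.

```python
from collections import defaultdict


def build_global_catalog(catalogs):
    """Merge per-warehouse catalogs into one index keyed by object name.

    catalogs: {warehouse_name: {object_name: description}} (hypothetical layout)
    """
    index = defaultdict(list)
    for warehouse, entries in catalogs.items():
        for name, description in entries.items():
            index[name.lower()].append((warehouse, description))
    return dict(index)


def search(index, term):
    """Return sorted (object_name, warehouse) pairs whose name contains term."""
    term = term.lower()
    return sorted(
        (name, warehouse)
        for name, hits in index.items()
        if term in name
        for warehouse, _ in hits
    )


# Hypothetical catalogs of two separate data warehouses.
catalogs = {
    "emea_dw": {"Revenue": "Net revenue by sales organization"},
    "apac_dw": {"Revenue": "Net revenue by region", "Headcount": "Employees by cost center"},
}
global_index = build_global_catalog(catalogs)
matches = search(global_index, "rev")
```

With a single index, the end user searches once and sees every warehouse that holds a matching object, instead of browsing each warehouse catalog separately.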

Metadata Management

Metadata is information about the data, such as data source type, data types, content description and usage, transformation and derivation rules, security, and audit control attributes. Access to metadata must not be limited to the data warehouse administrator; it must also be available to end users. For example, users want to know how revenue figures are calculated. Metadata also defines rules to qualify data before it is stored in the database. The end result is a data warehouse that contains complete and clean data.
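As a rough sketch of how metadata can both document a field and qualify data before it is stored, consider the Python example below. The FieldMetadata class, the field name net_revenue, and the derivation text are assumptions made up for illustration; they are not part of SAP BW.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class FieldMetadata:
    """Hypothetical metadata record for one data warehouse field."""
    name: str
    data_type: type
    source: str
    description: str
    derivation: str = ""
    # Rules a value must pass before it is stored.
    rules: List[Callable[[Any], bool]] = field(default_factory=list)

    def qualifies(self, value: Any) -> bool:
        """True when the value has the declared type and passes every rule."""
        return isinstance(value, self.data_type) and all(rule(value) for rule in self.rules)


def load(rows, meta):
    """Split incoming rows into accepted and rejected using the metadata rules."""
    accepted, rejected = [], []
    for row in rows:
        target = accepted if meta.qualifies(row.get(meta.name)) else rejected
        target.append(row)
    return accepted, rejected


# Illustrative metadata: answers "how is revenue calculated?" and rejects bad values.
revenue = FieldMetadata(
    name="net_revenue",
    data_type=float,
    source="sales_orders",
    description="Revenue after discounts",
    derivation="gross_amount - discount",
    rules=[lambda v: v >= 0.0],
)

rows = [{"net_revenue": 120.5}, {"net_revenue": -3.0}, {"net_revenue": "n/a"}]
accepted, rejected = load(rows, revenue)
```

The same record serves two audiences: the end user reads description and derivation, while the load process uses data_type and rules to keep incomplete or invalid rows out of the warehouse.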

Manageability

Today, data objects are distributed across the world and also reside on end-user workstations (laptops), so it is difficult to manage such environments. As part of its Customer Relationship Management (CRM) initiative, SAP is planning a Mobile Sales Automation (MSA) server that integrates and manages data between the SAP Business Information Warehouse and the data sets on a salesperson's laptop.

Adaptation of New Technologies

Implementation of an enterprise data warehouse is usually a multi-year project. Technically, as long as you have built your data warehouse on an architecture that uses accepted industry-standard APIs, you should be able to incorporate emerging technologies without extensive reengineering.

If your existing data warehouse environment uses open APIs, you can easily join information from multidimensional and relational data sources using ODBC. ODBO (OLE DB for OLAP), for example, integrates multidimensional data from SAP BW and Microsoft's OLAP server. Make sure that the data warehouse can adopt emerging technologies with the least amount of work.

Security

A data warehouse environment must support robust security administration by using roles and profiles that are centered on the behavior of information objects rather than purely on the database. For example, a role such as cost center auditor defined in a data warehouse allows one to view cost center information for a specific business unit for a given quarter, but not to print it or download it to the workstation.
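The cost center auditor example can be sketched as a small role check in Python. The Role class, the action names, and the values "BU-100" and "Q1" are hypothetical; a real data warehouse would keep such definitions in its own authorization system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    """Hypothetical role scoped to one information object, unit, and quarter."""
    name: str
    info_object: str
    business_unit: str
    quarter: str
    allowed_actions: frozenset


def is_allowed(role, action, info_object, business_unit, quarter):
    """Allow the action only inside the scope the role was defined for."""
    return (
        action in role.allowed_actions
        and info_object == role.info_object
        and business_unit == role.business_unit
        and quarter == role.quarter
    )


# The auditor may view, but not print or download.
auditor = Role(
    name="cost_center_auditor",
    info_object="cost_center",
    business_unit="BU-100",
    quarter="Q1",
    allowed_actions=frozenset({"view"}),
)

can_view = is_allowed(auditor, "view", "cost_center", "BU-100", "Q1")
can_print = is_allowed(auditor, "print", "cost_center", "BU-100", "Q1")
```

The point of the sketch is that the permission is attached to what the user does with an information object (view, print, download), not to database tables or columns.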

Reliability and Availability

New data warehouse construction products provide methods to keep systems highly available during data refresh. Products like SAP BW refresh a copy of an existing data object with the incoming data updates while end users keep using the existing information objects. Once the new information object is completely refreshed, all new requests are automatically pointed to it. Such technologies must be an integral component of enterprise data warehouses.
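The refresh-a-copy-then-switch approach can be illustrated with a generic Python sketch. This is not how SAP BW implements it internally; the InfoObjectStore class and its methods are assumptions used only to show the pattern.

```python
import threading


class InfoObjectStore:
    """Hypothetical store that refreshes a copy and then switches readers to it."""

    def __init__(self, initial_rows):
        self._active = list(initial_rows)
        self._lock = threading.Lock()

    def read(self):
        """Return the currently active copy; it is never modified after publishing."""
        with self._lock:
            return self._active

    def refresh(self, new_rows):
        """Load new data into a copy, then point all new requests at the copy."""
        shadow = list(self._active)  # work on a copy while users read the original
        shadow.extend(new_rows)      # apply the incoming updates to the copy
        with self._lock:
            self._active = shadow    # switch: new requests now see the refreshed data


store = InfoObjectStore([("Q1", 100)])
in_flight = store.read()        # a user request that started before the refresh
store.refresh([("Q2", 140)])
after_refresh = store.read()    # a request that started after the switch
```

A request that began before the switch keeps its consistent old view, and only the brief reference switch is protected by the lock, so readers are not blocked during the load itself.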

Upgradability and Continual Improvements

If any component of a data warehouse (database management system, hardware, network, or software) needs an upgrade, the upgrade must not lock out users and prevent them from doing their regular tasks. Moreover, any time new functionality is added to the environment, it must not disrupt end-user activities. One can apply certain software patches or expand hardware components (storage) while users are using the data warehouse environment. During such upgrades, end users may notice some delays in retrieving information, but they are not locked out of the system.

Scalability and Data Distribution

Because they move large volumes of data, data warehouses consume enormous network resources (four- to five-fold more than a typical OLTP transaction environment). In an OLTP environment, one can predict network bandwidth requirements because the data content associated with each transaction is somewhat fixed. In data warehousing, it is very hard to estimate the network bandwidth needed to meet end-user needs because the data volume may change with each request, depending on the data selection criteria. Large data sets are also distributed to remote locations across the world to build local data marts. The network must be scalable to accommodate large data movement requests.

To build dependent data marts, you need to extract large data sets from a data warehouse and copy them to a remote server. A data warehouse must support very robust and scalable services to meet data distribution and/or replication demands. These services also provide encryption and compression methods to optimize usage of network resources in a secured fashion.
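As a minimal sketch of the compression side of such a distribution service, the Python example below packs an extract before it is sent and verifies it on arrival. The functions pack and unpack and the sample rows are hypothetical. Encryption is deliberately left out here, because it would normally be handled by the transport (for example TLS) or a dedicated cryptography library.

```python
import hashlib
import json
import zlib


def pack(rows):
    """Serialize and compress an extract; return the payload and its checksum."""
    raw = json.dumps(rows, sort_keys=True).encode("utf-8")
    return zlib.compress(raw, 9), hashlib.sha256(raw).hexdigest()


def unpack(payload, checksum):
    """Decompress on the remote server and verify the data arrived intact."""
    raw = zlib.decompress(payload)
    if hashlib.sha256(raw).hexdigest() != checksum:
        raise ValueError("checksum mismatch: extract was corrupted in transit")
    return json.loads(raw.decode("utf-8"))


# Illustrative extract destined for a dependent data mart.
extract = [{"region": "EMEA", "quarter": "Q1", "revenue": 100}] * 1000
payload, checksum = pack(extract)
restored = unpack(payload, checksum)
raw_size = len(json.dumps(extract, sort_keys=True).encode("utf-8"))
```

Warehouse extracts tend to contain many repeated values, so compressing them before transfer can cut network usage considerably; the checksum lets the data mart reject a damaged copy instead of loading it.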

This can be demonstrated as shown below.
